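Algorithmic reverbs arrived in the early '70s. The idea rests on something acoustic engineers already do: look at a blueprint and predict what a room's reverb will be from the timing of the early and late reflections, how the frequency content decays over time (the waterfall plot), and how long the reverb tail lasts (RT60, the time it takes the level to fall by 60 dB). An algorithmic reverb generates its sound from parameters like these. That, in a nutshell, is algorithmic reverb.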

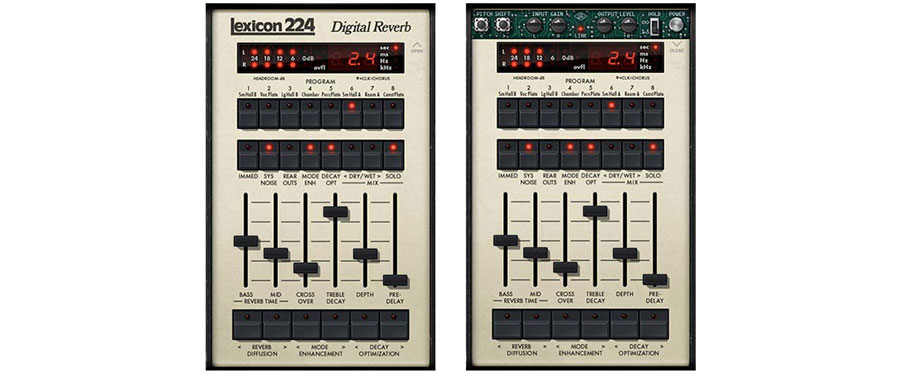
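To make the RT60 idea concrete, here is a minimal sketch of one classic building block, a feedback comb filter, with its feedback gain derived from a target RT60. The function names, the 50 ms loop length, and the 2-second RT60 are illustrative assumptions, not settings from any particular reverb unit. Real algorithmic reverbs combine many such delays with filters.

```python
import math

def comb_gain_for_rt60(delay_samples, rt60_seconds, sample_rate=48000):
    """Feedback gain so the echoes of a comb filter fall 60 dB in rt60_seconds."""
    loop_time = delay_samples / sample_rate  # seconds per trip around the loop
    # Each trip multiplies the level by the gain; after rt60/loop_time trips
    # the total must be 10**-3, which is -60 dB.
    return 10.0 ** (-3.0 * loop_time / rt60_seconds)

def feedback_comb(signal, delay_samples, gain):
    """y[n] = x[n] + gain * y[n - delay_samples]"""
    out = [0.0] * len(signal)
    for n, x in enumerate(signal):
        delayed = out[n - delay_samples] if n >= delay_samples else 0.0
        out[n] = x + gain * delayed
    return out

SAMPLE_RATE = 48000
DELAY = 2400   # hypothetical 50 ms loop
RT60 = 2.0     # hypothetical target decay time in seconds

gain = comb_gain_for_rt60(DELAY, RT60, SAMPLE_RATE)
rt60_index = int(RT60 * SAMPLE_RATE)
impulse = [1.0] + [0.0] * rt60_index          # a single click, then silence
tail = feedback_comb(impulse, DELAY, gain)

# Level of the echo that lands exactly RT60 seconds after the click, in dB.
level_db = 20.0 * math.log10(abs(tail[rt60_index]))
print(f"gain per loop: {gain:.4f}, level at RT60: {level_db:.1f} dB")
```

The point of the sketch is that the decay time is a number you dial in, not something captured from a real room.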

Originally, algorithmic reverbs didn't emulate specific rooms. They offered general types: here is a dark hall, a live church, a plate, and so on. That is key to understanding algorithmic vs. convolution reverb. Algorithmic reverbs emulate what is actually happening in the room, in the sense of how the decay tail changes. Here is what really happens in a room: take a pop gun, fire it off, and capture the frequencies generated. Do it again. Compare the frequency content of the two tails and they'll be different. It's a small difference, but an important one, and it comes from how the sound reflects and how the walls absorb it. Algorithmic reverbs reproduce this by modulating the tail. Lexicon, one of the pioneers of reverb processors, did this first, and it's one reason why they've dominated the field for so long.
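The pop gun comparison can be sketched in code. The example below is a toy, not a description of how Lexicon or any other manufacturer implements modulation: it assumes a single feedback comb filter whose delay time is nudged by a slow sine LFO, with hypothetical names and settings throughout. Firing the same click at two different moments gives identical tails when the delay is fixed and different tails when it is modulated.

```python
import math

def modulated_comb(signal, base_delay, gain, depth=0.0, lfo_hz=0.0,
                   sample_rate=48000):
    """Feedback comb whose delay (in samples) drifts by +/- depth with a sine LFO."""
    out = [0.0] * len(signal)
    for n, x in enumerate(signal):
        lfo = math.sin(2.0 * math.pi * lfo_hz * n / sample_rate)
        delay = base_delay + depth * lfo      # fractional delay in samples
        pos = n - delay                       # where in the past to read
        i = math.floor(pos)
        frac = pos - i
        # Linear interpolation between neighbours; before time zero is silence.
        a = out[i] if i >= 0 else 0.0
        b = out[i + 1] if i + 1 >= 0 else 0.0
        out[n] = x + gain * (a * (1.0 - frac) + b * frac)
    return out

def tail_after_shot(shot_at, length, **settings):
    """Fire one click at sample shot_at and return the response from there on."""
    signal = [0.0] * (shot_at + length)
    signal[shot_at] = 1.0
    return modulated_comb(signal, **settings)[shot_at:]

def max_difference(a, b):
    return max(abs(x - y) for x, y in zip(a, b))

fixed = dict(base_delay=480, gain=0.7)                         # no modulation
moving = dict(base_delay=480, gain=0.7, depth=8.0, lfo_hz=1.0)  # modulated

# Two "pop gun" shots, one at sample 0 and one 6000 samples later.
fixed_first = tail_after_shot(0, 8000, **fixed)
fixed_second = tail_after_shot(6000, 8000, **fixed)
moving_first = tail_after_shot(0, 8000, **moving)
moving_second = tail_after_shot(6000, 8000, **moving)

print("fixed delay, difference between shots:", max_difference(fixed_first, fixed_second))
print("modulated delay, difference between shots:", max_difference(moving_first, moving_second))
```

A fixed impulse response behaves like the first pair: every shot produces exactly the same tail. The modulated version behaves more like the room described above, where no two shots decay in quite the same way.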

Most of the mixes you hear on the radio today still use algorithmic reverbs. Why? Because they're more neutral: they don't put you in St. Mark's Church, they put you in a space that feels like a church. So even though it is an artificial rendering of an imagined space, the tails modulate, and the way the sound responds is a bit more natural to our ears.
